Pilots + Passengers: Navigating a World of AI-Generated "Workslop"

Consumer Trend Seeps Into The Enterprise

In this world of uncertainty, one thing you can count on is that what happens first in the consumer world often gets reincarnated within the confines of the corporate world, AKA “The Enterprise.” For example, asynchronous work existed before the social media revolution, but it was social media that accelerated the steady shift away from email as our primary means of getting work done and toward the way we work today. The mobile revolution was another massive paradigm shift that started in the consumer world and then profoundly transformed not only how work gets done within the enterprise but also how our digital products are designed, often with a mobile-first expectation or, at the very least, a cross-platform approach to digital experiences.

Before ChatGPT was placed into the hands of consumers, people were already experimenting with AI-generated imagery. One of the first pivotal moments in the Generative AI revolution was the phenomenon of everyday people updating their online photos, showcasing hyper-realistic yet synthetic images of themselves in all kinds of scenarios and getups, from dragon-slaying princess warriors to glossy business-friendly headshots to ridiculously flattering versions of themselves that made almost anyone look like a supermodel. For a while, our social feeds were full of these images as people discovered Generative AI in the early days.

Welcome to one of the earliest examples of “AI Slop”

“AI Slop,” at its core, is the digital residue of generative abundance: content that’s algorithmically spawned, barely curated, and pushed into the world at scale. It’s the blog post written in seconds but devoid of insight. The marketing email that sounds like it came from 10,000 other brands. The image that’s technically perfect but emotionally vacant. It’s what happens when generative tools become default instead of intentional. The word “slop” itself (raw, messy, thoughtless) evokes the sludge that accumulates when the machine runs on autopilot and the human steps out of the loop. What started as fun experimentation (who didn’t want to see themselves as an AI-enhanced demigod?) quickly metastasized into a flood of low-value content, spilling from social feeds to pitch decks to intranets across the enterprise. It’s the uncanny valley of productivity: lots of stuff getting made, but not a lot of meaning being created.

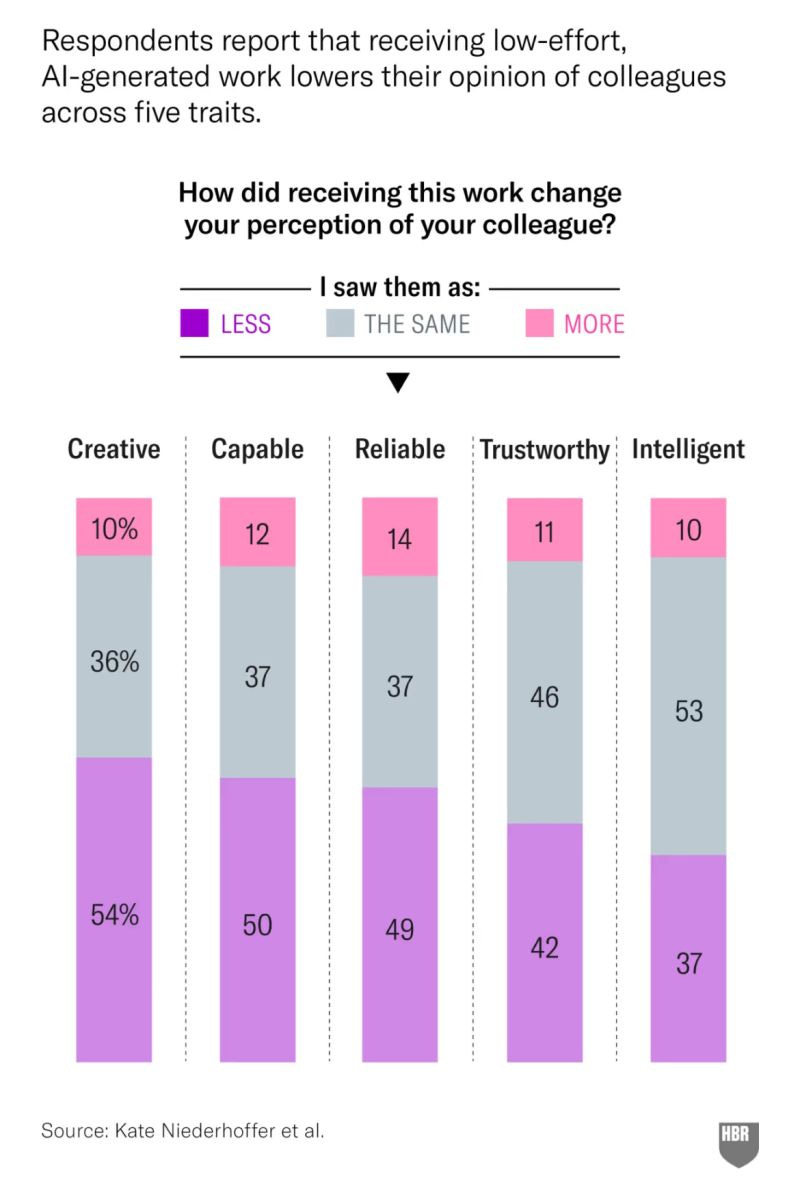

My former colleague, Kate Niederhoffer, recently co-led a research initiative in partnership with the Stanford Social Media Lab, surveying over 1,500 enterprise professionals to gain a deeper understanding of their views on colleagues using AI for work. The key finding? AI Transformation within the Enterprise is costing companies money while also damaging the reputations of some employees.

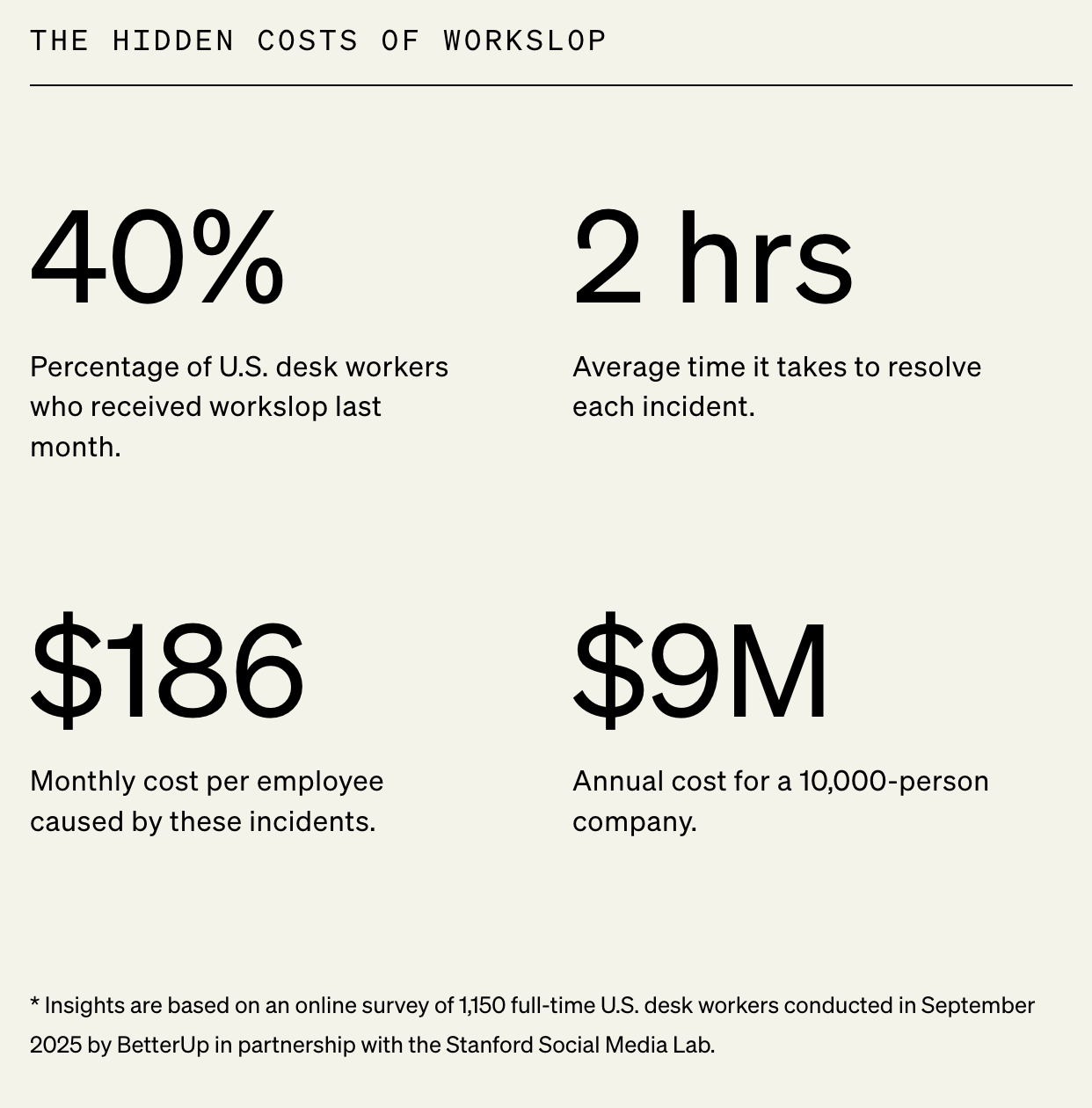

The results are striking. In the survey, 40% of enterprise professionals reported encountering “workslop” in the past month, with each instance consuming nearly two hours on average. Based on respondent salaries, the researchers estimated a productivity loss of approximately $186 per person per month for large organizations, which adds up quickly, resulting in millions of dollars in lost efficiency.
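To see how quickly those numbers compound, here is a rough back-of-the-envelope sketch. The 40% share and the $186 monthly figure come from the survey above; the headcount of 10,000 is a hypothetical value picked purely for illustration, and the sketch assumes the monthly cost applies only to the people who actually encounter workslop. Treat it as a way to play with the arithmetic, not as the researchers' own calculation.

```python
# Back-of-the-envelope estimate of the annual cost of workslop.
# The 40% share and $186 monthly figure come from the survey cited above;
# HEADCOUNT is a hypothetical value chosen only for illustration.

HEADCOUNT = 10_000            # hypothetical organization size (assumption)
SHARE_AFFECTED_PERCENT = 40   # share who encountered workslop in the past month
MONTHLY_COST_USD = 186        # estimated loss per affected person per month


def annual_workslop_cost(headcount: int, share_percent: int, monthly_cost: int) -> int:
    """Return the estimated yearly cost in whole dollars.

    Assumes the monthly cost applies only to the affected share of employees.
    """
    affected = headcount * share_percent // 100
    return affected * monthly_cost * 12


if __name__ == "__main__":
    total = annual_workslop_cost(HEADCOUNT, SHARE_AFFECTED_PERCENT, MONTHLY_COST_USD)
    print(f"Estimated annual cost: ${total:,}")  # Estimated annual cost: $8,928,000
```

Under these assumptions, a 10,000-person organization would lose just under $9 million a year. Swap in your own headcount to see where your company lands.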

You can use AI to make your work better, says Kate Niederhoffer, vice president of BetterUp Labs and one of the researchers. But you can also use it to pretend to get 20 tasks done, she says, and just “Trojan horse” a bunch of work to your colleagues.

The emotional toll is real too: over half of participants said workslop annoyed them; 38% felt confused; 22% were outright offended. Even more concerning, nearly half of the respondents saw the creators of workslop as less creative, less capable, and less reliable. And perhaps most telling of all, 18% of those using AI admitted to knowingly sending out content they considered low-value, shallow, or sloppy. This isn’t hypothetical. It’s happening inside companies trying to embrace AI while dealing with the fallout of poor execution.

Pilots + Passengers

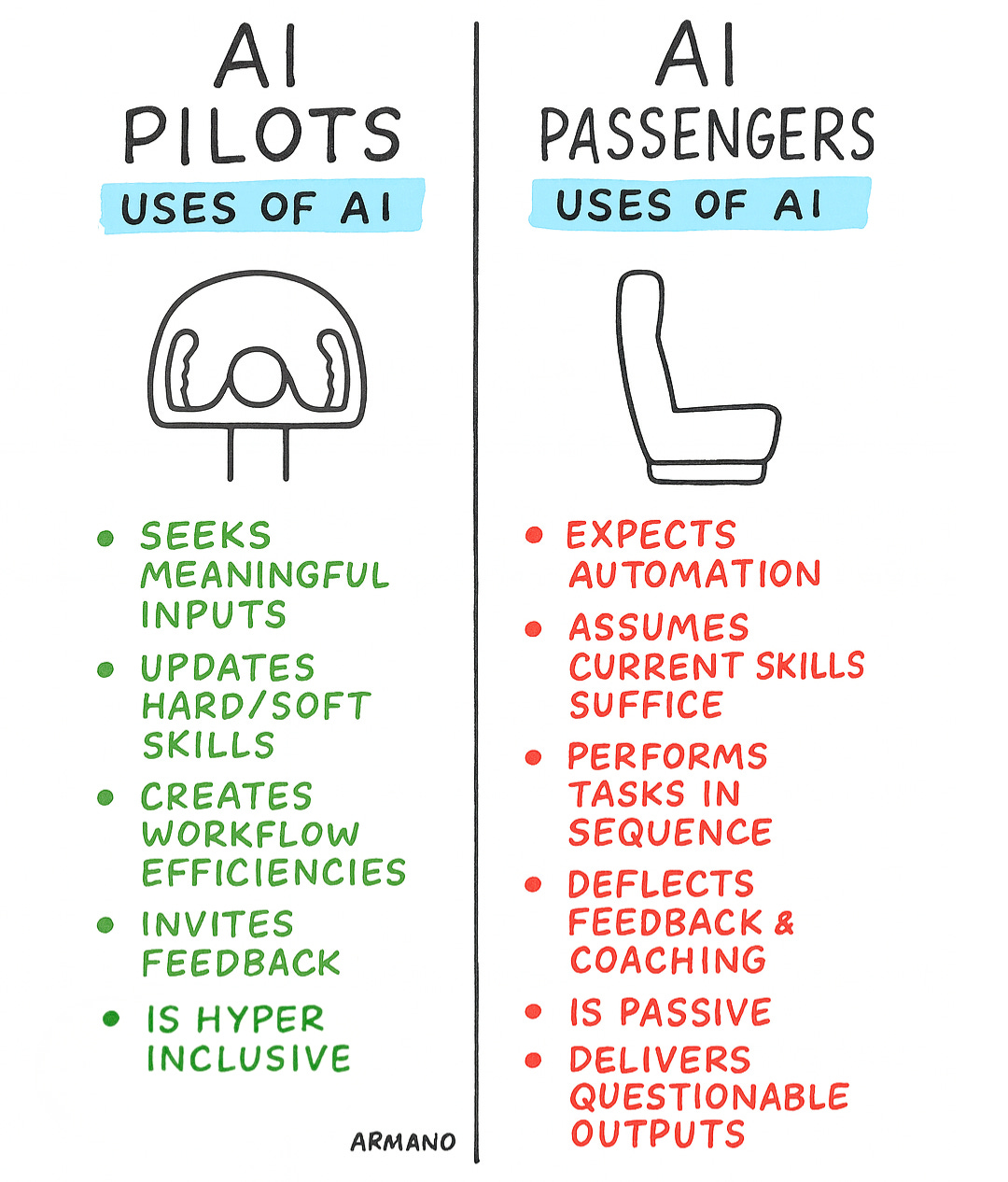

One of the sharpest distinctions in the study is between “Pilots” and “Passengers.” Pilots are the people who treat AI as a deliberate companion—questions first, prompts second, always in the driver’s seat of how they use it. Passengers lean back. They let the tool lead, losing agency in the process. That dichotomy resonates deeply with something I explored in my piece, “Intelligent Wealth vs. Cognitive Debt.” In that newsletter, I argued that too many of us accumulate mental liabilities by outsourcing too much to machines—the “cognitive debt” we should’ve resisted. Pilots, by contrast, generate “intelligent wealth”: they invest effort, maintain judgment, and get credit for it. When enterprises slip too many people into a passive mode, they don’t just lose nuance—they invite sloppy outputs, diminished accountability, and a creeping cultural debt that they’ll struggle to repay.

AI Pilots and Passengers represent a fundamental dichotomy in how professionals are engaging with AI, and the difference matters. On the left, we see the mindset of the “AI Pilot”: someone who actively seeks out better inputs, evolves their skill set, builds efficiency into their workflow, and welcomes critical feedback to fine-tune their approach. These individuals don’t just use AI; they lead with it. By contrast, the “AI Passenger” represents the growing risk inside organizations: passive adopters who expect automation to do the thinking, fall back on outdated skills, and treat AI as a conveyor belt for output instead of a collaborator. The distinction here isn’t just technical; it’s cultural. Pilots create momentum. Passengers create drag. And as more teams integrate AI into their daily work, these mindsets are becoming a defining line between meaningful acceleration and a flood of cognitive clutter.

The enterprise doesn’t have an AI problem—it has a human one.

We’ve handed out copilots without checking if anyone knows how to fly. The tools aren’t the issue; the mindsets are. When AI becomes a shortcut instead of a skill, what we’re left with isn’t transformation—it’s workslop. And the cost isn’t just efficiency… it’s credibility, creativity, and trust. The organizations that win won’t be the ones with the most AI adoption.

They’ll be the ones that turn their passengers into pilots—on purpose.

Visually yours,

David Armano is a CX strategist and digital transformation leader who helps professionals connect brand, product, and customer experience. He’s known for his unique approach to visual thinking and insightful, yet grounded, takes on intelligent experiences, culture, and leadership.

In addition to his day job, he writes David by Design to turn complex shifts into actionable ideas.

Very good post, David. Certainly worth a second read tomorrow.

Here’s a personal AI story: my most recent product — Creative Whacks: Deluxe Edition, Amazon link https://a.co/d/ezpTahv — was published several weeks ago.

During the past year when I was creating it, I MADE A DELIBERATE EFFORT NOT TO CONSULT ANY AI TOOLS. That’s because I didn’t want my thinking shaped in any way by a “purveyor of puréed knowledge.”

I feel that it’s important for any creator — in his or her own way — to wrap their hands around the messiness of the creative process. This allows them to deal directly with ambiguity, blind alleys that might possibly serve as stepping stones to fresh information, and weird “third right answers.” My two cents.

Again, nice post!

Appreciate you bringing this recent report into thoughtful conversation @David.

In light of this report and some challenging experiences I've encountered over the last year (e.g. being sent a 30-page document that I am asked to review + improve + edit, even when the originator of the document didn't :( ), here are 2 steps I am experimenting with:

1. I have started to include an AI Disclosure at the bottom of my work products with colleagues (similar to my publicly posted thought pieces) that says if and how I used AI (I see some folks also include which model, maybe something to also consider).

2. For more engaged projects with one or more colleagues, I am also creating a working plan of "how are we going to use AI here - if at all?"

By having upfront, honest discussions about when / how / why we are using AI, I believe that it will call out our higher selves... and hold us all accountable to each other (something that was clear in the report is how much trust + perception of capability was eroded when workslop was sent over the transom).

Curious how others approach these topics, too! (especially with this report making the rounds! ;)