This past week, the world of Enterprise AI began processing the latest game-changer: Marc Benioff, CEO of Salesforce, announced that his company is going all in on “AI Agents,” industry shorthand for AI-powered applications that harness Generative AI advancements such as LLMs (Large Language Models) to automate tasks in a personalized way rather than to create content, whether text, image, or video. From VentureBeat:

Salesforce, the leading provider of cloud-based customer relationship management software, has introduced two advanced artificial intelligence models—xGen-Sales and xLAM—aimed at helping businesses increase automation and efficiency. The announcement, made today, reflects Salesforce’s ongoing investment in AI technology for the enterprise.

Developed by Salesforce AI Research, these models are designed to set a new standard for AI-driven automation, particularly in sales and in performing tasks that require triggering actions within software systems. The release comes just ahead of Salesforce’s annual Dreamforce conference later this month, where the company is expected to share broader plans for autonomous AI tools.

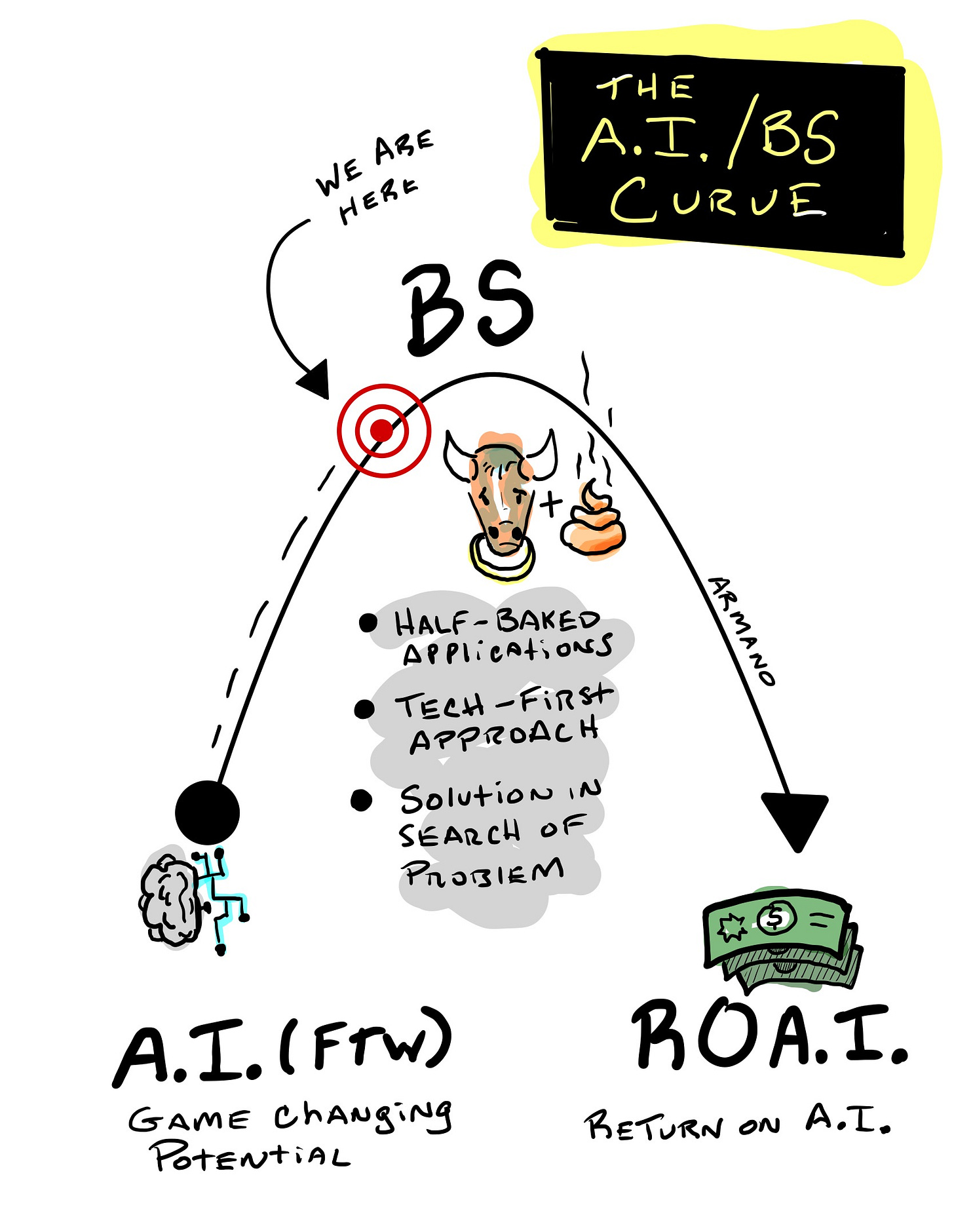

Full disclosure: I am bullish on AI, both in content creation and task automation, on the consumer and enterprise side. For example, I recently decided to take an extended West Texas desert weekend in early November, and with some prompting, AI (ChatGPT) created the perfect detailed itinerary. This would have taken hours in the pre-GenAI days, even with the best use of Google and the Internet. Being a GenXer who has worked at ground zero for almost every major digital transformation, including this latest one, means seeing similar patterns repeat themselves. From experience, we’re getting close to “peak BS” in AI. Eventually things will return to earth, and we will get busy integrating AI broadly across functions while specializing in actions, tasks, etc. Before we get to that point, we will have to navigate a few “AI/BS” issues. Here are a few to look out for:

Half-Baked Applications + The Interoperability Challenge

There’s a lot of money at stake in this AI arms race, which will result in a flood of applications rushing into the market. As for general AI chatbots already being adopted within the enterprise, Microsoft’s Copilot is in a good starting position to serve as an “IT-approved” AI chatbot for work purposes, though it is worth noting that security issues persist. It is in the “AI Agent” part of the Enterprise AI sector especially that applications will take time to get pressure tested in real-world conditions. Many tools that promise to automate data analysis, communications such as e-mails, workflows, etc., will enter the market, but few will initially live up to what they promise. Interoperability, the ability to work seamlessly from system to system and OS to OS, will likely become an issue and take time to iron out. As with e-commerce, Web 2.0, and other significant moments of digital transformation, thousands of startups will eventually get consolidated. While that happens, many bugs must be worked out, and substance must be separated from snake oil.

Tech-First Approach

Like other digital transformations, AI transformation is often led by technology, frequently overlooking other factors. Generally speaking, any digital transformation is supported by three key pillars:

- People: Culture, HR, Ethics, etc.

- Process: Workflow, collaboration, documentation, etc.

- Technology: Systems, security, maintenance, data

Starting with technology feels intuitive because it is at the core of change management and the engine behind the promise of more productivity or efficiency. However, a tech-first approach often overlooks other critical questions associated with AI. On the people front, AI raises significant trust issues, whether distrust of AI-generated output or anxiety about job security. On the process front, existing workflows are already complex within organizations, and many rely on applications that are already entrenched. Introducing new platforms that promise to automate tasks such as email responses or customer tickets is likely inevitable, but the challenges that come with them cannot be addressed by technology alone. A tech-first approach often ends up surfacing these issues, making it clear that the tech itself may need to wait until other aspects are thought through.

Solution In Search of A Problem

Not long ago, I talked with someone who had spent considerable time consulting with administrators at a higher-ed institution. They observed that the administrators commonly worked long days, often responding individually to prospective students asking questions about the institution. The administrators are exploring AI to at least partially automate some of these email responses. This is a rare example of a problem needing a solution, where AI can be part of that solution, but based on current and past experience, I believe it is the exception and not the rule. Because AI is so powerful, the temptation will exist to invent things that can be done instead of making things that directly address an issue. This is where an organization’s researchers, observers, anthropologists, designers and empaths will play an important role. Providing the kind of value that generates a tangible ROI, or “ROAI,” often takes the soft skills of someone who can see a problem that should be solved and then partner with the engineer who can figure out how technology can help solve it.

We will be in the BS phase of AI for a little while, which is consistent with previous innovation cycles. During the early(ish) days of social media, it took a few years for marketing/comms orgs to staff up teams, incorporate social media into customer service, integrate social listening into PR media monitoring, and shift media budget and content creation to social channels. In those early years, there was churn on the software side and turnover on the talent side. And there were a lot of people who made money at the expense of organizations trying to figure everything out.

With every digital transformation, there’s a period where we have to wade through the BS to get to the business of substance...

We’ll have a little more wading to go, but the ROAI will eventually come. Until then, keep your AI/BS detectors intact.

Many good observations, David. It’s interesting to observe how things are unfolding. (I’m really glad I didn’t have to hitch my wagon to this scene as a self-minted “AI consultant”; many have done that.)

I am about 80% through Ethan Mollick’s recent book “Co-Intelligence,” which I’ve found to be informative. I assume you’ve read it.